Pentagon Threatens Anthropic Over Refusal to Develop 'Killer Robots'

U.S. Defense Department considers 'supply chain risk' designation for AI company over ethical AI principles.

Published on Feb. 18, 2026


The U.S. Department of Defense is reportedly considering severing ties with AI company Anthropic and designating it as a 'supply chain risk' due to the company's refusal to allow its technology to be used in the development of autonomous 'killer robot' weapons systems. This move comes after Anthropic published a new 'Constitution' for its flagship AI model, Claude, outlining ethical principles to prevent the technology from being used in ways that undermine human oversight.

Why it matters

The Pentagon's hardline stance against Anthropic's ethical AI principles puts the U.S. government at odds with the Vatican, which has long voiced opposition to the development of autonomous weapons systems. This clash highlights the growing tension between the military's desire for advanced AI capabilities and the ethical concerns around the use of such technology in weapons.

The details

Anthropic, a San Francisco-based public benefit corporation, has refused to allow the Pentagon to use its Claude AI platform in the design or deployment of autonomous weapons systems, known as Lethal Autonomous Weapons Systems (LAWS) or 'killer robots'. In response, the Pentagon is reportedly considering designating Anthropic as a 'supply chain risk', which would mean any company doing business with the U.S. military would have to sever ties with Anthropic. Because Anthropic's technology is already integrated into many military systems, such a designation would cause significant disruption for defense contractors and across the AI industry.

- In late January 2026, Anthropic published a new 'Constitution' for its Claude AI model, outlining ethical principles to prevent the technology from being used in ways that undermine human oversight.

- On January 12, 2026, U.S. Secretary of Defense Pete Hegseth unveiled the Pentagon's Artificial Intelligence Acceleration Strategy, which called for the use of AI models 'free from usage policy constraints that may limit lawful military applications'.

- On January 9, 2026, Hegseth issued a memorandum directing the Department's Chief Digital and Artificial Intelligence Office to establish benchmarks for 'model objectivity' as a primary procurement criterion within 90 days, and to incorporate 'any lawful use' language into DoD contracts within 180 days.

The players

Anthropic

A San Francisco-based public benefit corporation dedicated to the safe and ethical development and use of AI.

Pete Hegseth

U.S. Secretary of Defense, who unveiled the Pentagon's Artificial Intelligence Acceleration Strategy and issued a memorandum directing the Department's Chief Digital and Artificial Intelligence Office to establish benchmarks for 'model objectivity' and to incorporate 'any lawful use' language into DoD contracts.

Pope Leo XIV

The current Pope, who has highlighted the danger of AI weapons systems and called for appropriate and ethical management of AI, along with regulatory frameworks focused on the protection of freedom and human responsibility.

Sean Parnell

Chief Pentagon spokesman, who confirmed that the Department's relationship with Anthropic is under review.

What they’re saying

“We must not employ AI models which incorporate ideological 'tuning' that interferes with their ability to provide objectively truthful responses to user prompts.”

— Pete Hegseth, U.S. Secretary of Defense (Crux)

“There is a danger of returning to the race of producing ever more sophisticated new weapons, also by means of artificial intelligence. The latter is a tool that requires appropriate and ethical management, together with regulatory frameworks focused on the protection of freedom and human responsibility.”

— Pope Leo XIV (Crux)

“It will be an enormous pain in the ass to disentangle, and we are going to make sure [Anthropic] pay[s] a price for forcing our hand like this.”

— Senior Pentagon Official (Axios)

What’s next

The Pentagon has confirmed that its relationship with Anthropic is under review, but no decision on a 'supply chain risk' designation has yet been announced.

The takeaway

This clash between the Pentagon's desire for advanced AI capabilities and Anthropic's ethical principles highlights the growing tension between military priorities and the need for responsible AI development. It also puts the U.S. government at odds with the Vatican's longstanding opposition to autonomous weapons systems, raising concerns about the future of AI regulation and the protection of human dignity.

San Francisco top stories

San Francisco events

Feb. 18, 2026

Van MorrisonFeb. 18, 2026

Sam Smith - To Be Free: San Francisco 2/18Feb. 18, 2026

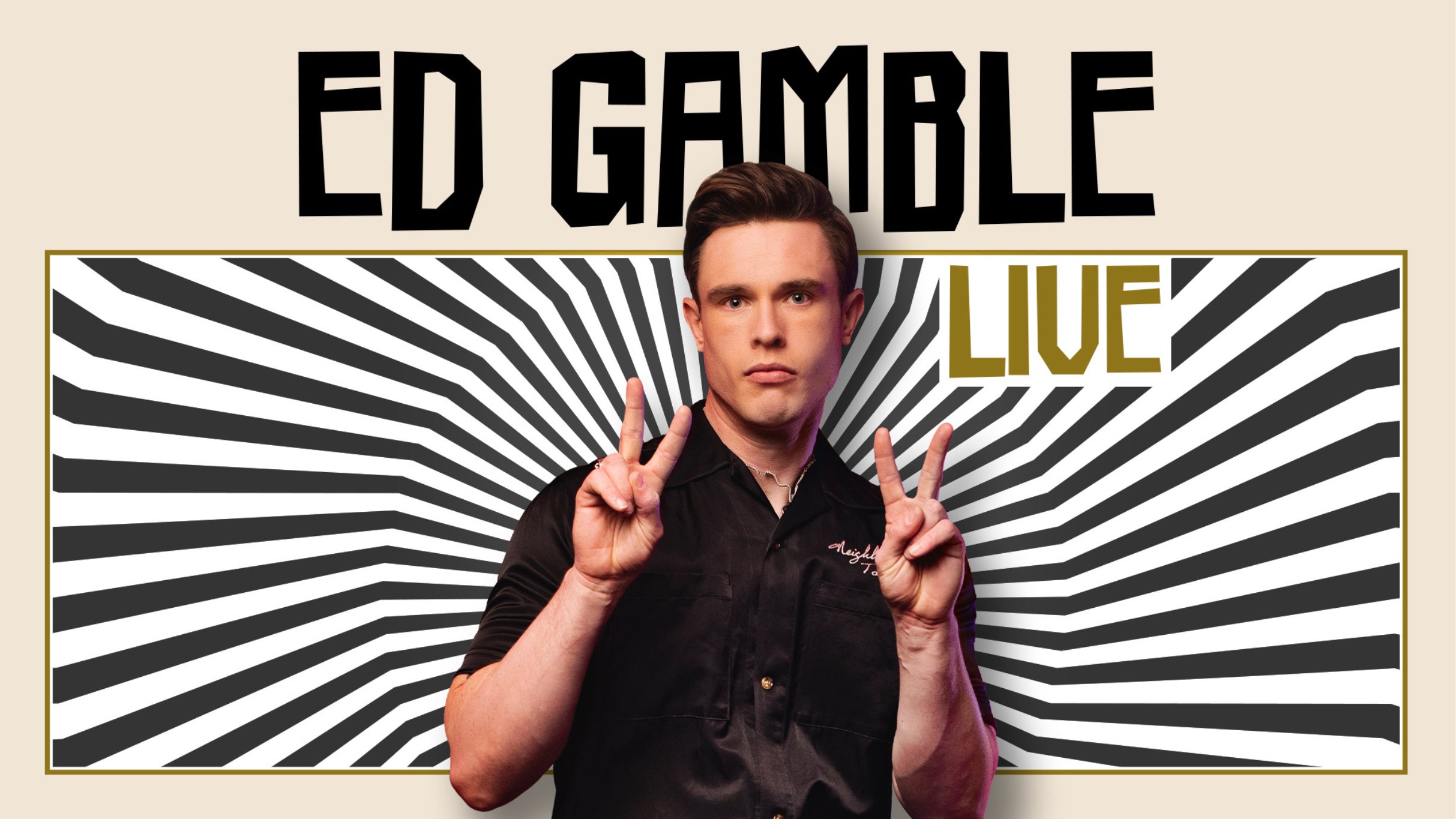

SF Sketchfest Presents: Ed Gamble - Live