Washington Considers Requiring AI Chatbots to Add Mental Health Safeguards

New legislation would mandate disclosure requirements and suicide prevention protocols for AI assistants.

Published on Feb. 12, 2026

Washington state is considering legislation that would require artificial intelligence chatbots to add mental health safeguards. The proposed bills would require chatbots to clearly disclose that they are AI systems, not humans or healthcare providers, and would require chatbot operators to establish protocols for detecting and responding to users who express self-harm or suicidal ideation.

Why it matters

As AI chatbots get better at mimicking human conversation, concerns are growing about their potential for harm, especially to vulnerable users seeking mental health support or advice. The legislation aims to add transparency and safety measures that keep people, particularly young users, from relying on AI systems for sensitive health matters.

The details

House Bill 2225 and Senate Bill 5984 would require AI chatbots to notify users at the start of an interaction, and again every three hours, that they are conversing with an artificial intelligence, not a human. If a user seeks mental or physical health guidance, the chatbot would have to disclose that it is not a healthcare provider. Chatbot operators would also have to implement protocols to detect signs of self-harm or suicidal ideation and provide referrals to crisis services.

- The proposed legislation is currently being considered by the Washington state legislature.

The players

Bob Ferguson

The governor of Washington state, who supports the new AI chatbot legislation.

House Bill 2225

A bill in the Washington state legislature that would mandate mental health safeguards for AI chatbots.

Senate Bill 5984

A companion bill in the Washington state senate that would also require AI chatbots to add mental health protections.

What’s next

The Washington state legislature is expected to vote on the proposed AI chatbot legislation in the coming months.

The takeaway

This legislation reflects growing concerns about the risks of AI chatbots providing mental health advice, especially to vulnerable users. By mandating transparency and safety protocols, Washington aims to protect people from potentially harmful interactions with AI systems on sensitive health matters.

Seattle top stories

Seattle events

Feb. 13, 2026

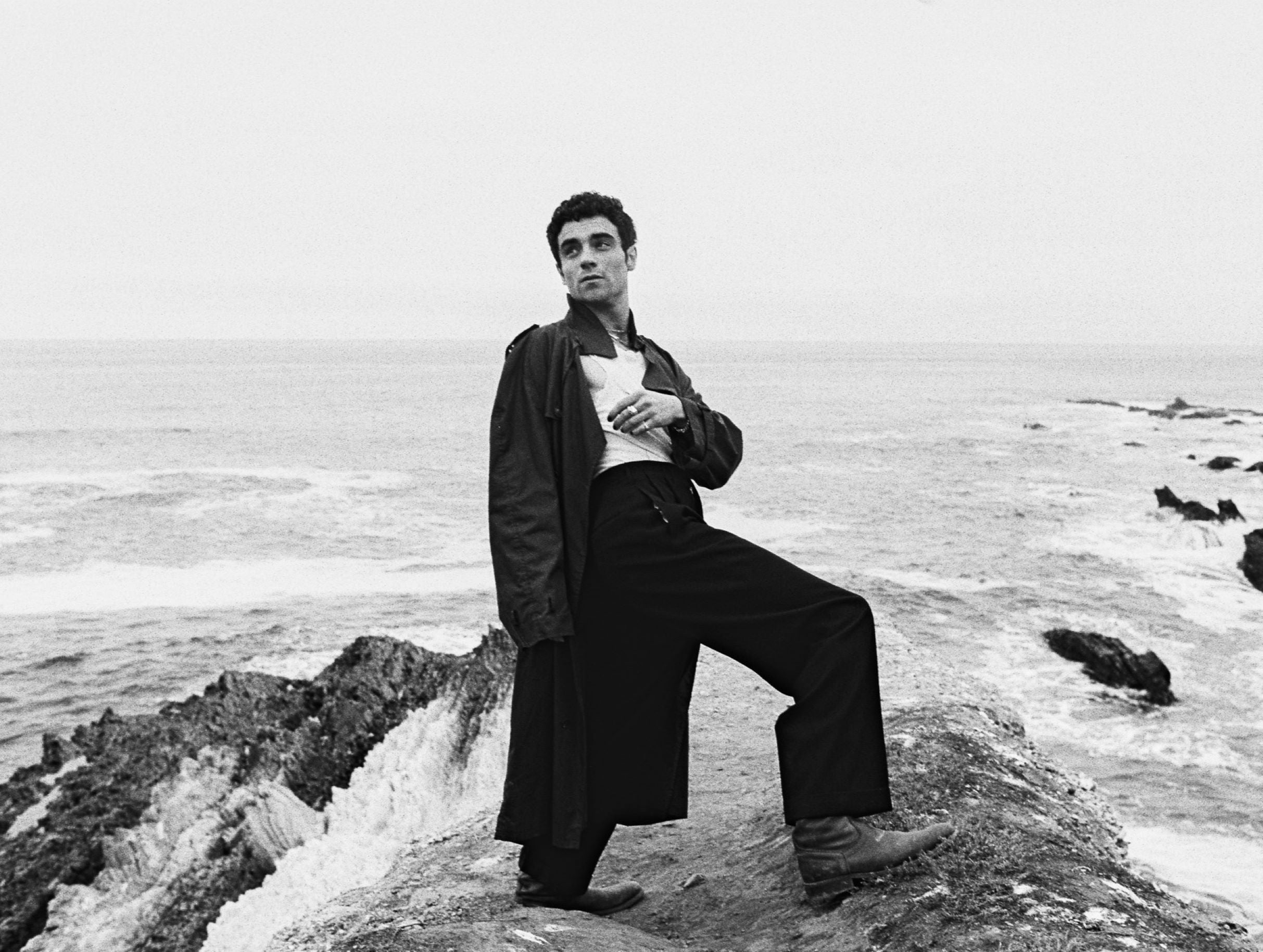

Del Water Gap w/ Hannah JadaguFeb. 13, 2026

Enjambre (21+)Feb. 13, 2026

Michael Shannon & Jason Narducy and Friends Play R.E.M.